The work discussed in this blog was presented to the COVID-19 Mobility Data Network on August 14, 2020, as part of regular weekly meetings that aim to share information, analytic tools, and best practices across the network of researchers.

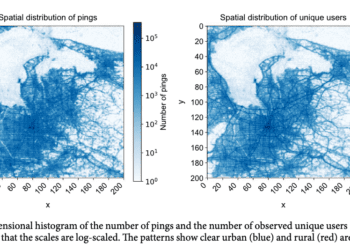

The near real-time information about human movement provided by aggregated population mobility data has tremendous potential to help refine interventions when appropriate legal, organizational, and computational safeguards are in place. As the private sector, policymakers, and academia work together to leverage novel sources of data to track the spread of the pandemic, Randall Harp, Laurent Hébert-Dufresne, and Juniper Lovato from the University of Vermont argue that anonymization at the individual level is insufficient: the notion of privacy should extend to communities as well. Information that identifies characteristics at the community level can lead to “finger-pointing” and disproportionately highlight marginalized groups within a larger population. Ideally, one would add noise at the community level to anonymize, for example, towns, balancing the obfuscation of sensitive, identifiable data against the loss of information that is of public health interest.
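To make the idea of community-level noise concrete, here is a minimal sketch in Python. It is not the UVM team's method: the choice of Laplace noise, the `scale` parameter, the function names, and the town counts are all illustrative assumptions. It perturbs each town's aggregated trip count so that small communities are harder to single out.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale`
    # is a Laplace(0, scale) sample.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def noisy_town_counts(counts, scale, seed=None):
    """Return a copy of `counts` with Laplace noise added to each town.

    `counts` maps a (hypothetical) town name to an aggregated count.
    Larger `scale` means more obfuscation and less analytic precision.
    """
    rng = random.Random(seed)
    noisy = {}
    for town, count in counts.items():
        value = count + laplace_noise(scale, rng)
        # Clamp at zero and round so the output still looks like a count.
        noisy[town] = max(0, round(value))
    return noisy

# Made-up example data, for illustration only.
town_trips = {"Town A": 1200, "Town B": 45, "Town C": 310}
print(noisy_town_counts(town_trips, scale=10.0, seed=0))
```

The trade-off described above shows up in `scale`: a value large enough to mask a town with 45 trips barely changes a town with 1,200, while a value large enough to mask the big town would wipe out the signal from the small one.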

The team observed that the presumption of individual control over personal data, and the assumption that consent is informed, may no longer be valid in the context of large data sets and sophisticated, networked systems. The evolving complexity of data processing, the volume of consent needed to completely opt out of personal data use within a complex system, and the broad-stroke terms of service that users typically consent to often permit flexible data use that individuals may not have considered. Furthermore, when the collected anonymized individual-level data are leaky, frameworks designed for individual privacy stop being protective at the population level: populations that haven’t consented to their data being collected may still be implicated in its use.

Academia is a common source of leaky data. As researchers from the COVID-19 Mobility Data Network (CMDN) discussed the procedures they must complete to comply with traditional research ethics requirements, it became evident that the existing systems of ethical review by institutional review boards (IRBs) need to be updated to fit the kind of data being generated right now. The UVM team calls for the academic community to think about the regulations and structures required to equip IRBs with the mechanisms to determine the suitability of proposed analytics for these new large, complex, networked datasets. The current practice of requesting “Non-human subjects Research” exemptions or waivers in health data science is no longer appropriate.

Network participants explored alternative models. As mobility data morph through the analytical pipeline, there is a need to consider alternatives to the heavy, content-driven architectures and to shift toward Privacy by Design frameworks, or architectures that incorporate a fiduciary responsibility within the system. Should there be a legal framework for data processors to act in a fiduciary capacity? For this to be a feasible alternative, the field would need professional guidelines and field-specific standards. Until these gaps are filled, the use of such data should retain a human in the loop, to avoid the pitfalls of black-box machine learning algorithms.

You can find more information about the team’s work on distributed consent frameworks for data privacy in their recently published manuscript here, and learn more about the other research taking place at The Vermont Complex Systems Center here.